Building a Cloud Development Platform with Coder

Overview

Modern development workflows demand flexibility, consistency, and powerful tooling. Cloud development environments solve these challenges by providing on-demand, standardized workspaces that developers can access from anywhere. This post explores how I built a production-ready cloud development platform using Coder, Kubernetes, and homelab infrastructure.

What is Coder?

Coder is an open-source platform that provisions cloud development environments. Think of it as “development workspaces as a service” - developers can spin up fully-configured development machines on-demand, access them via SSH or web-based IDEs, and destroy them when done.

Key Benefits

- Consistent Environments: Every developer gets the same configuration

- Resource Efficiency: Share compute resources across the team

- Quick Onboarding: New developers get productive environments in minutes

- Secure Access: Centralized access control and audit logging

- Cost Control: Automatically stop idle workspaces to save resources

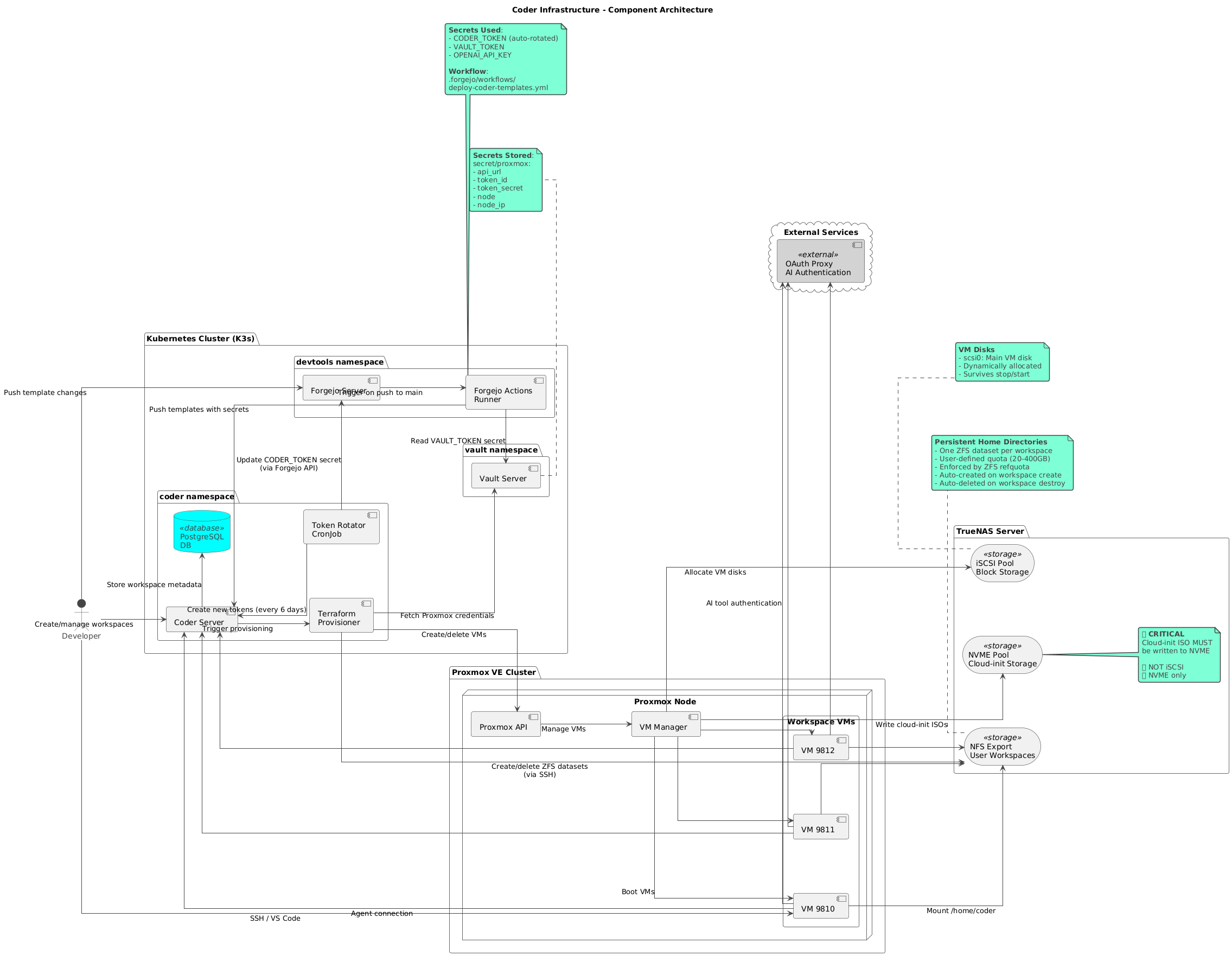

Infrastructure Architecture: The Complete Picture

The Coder platform runs on a sophisticated homelab infrastructure that demonstrates enterprise-grade architecture principles. Understanding the underlying infrastructure is critical to appreciating the platform’s capabilities and reliability.

Multi-Layer Architecture Overview

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

+================================================================+

| Kubernetes Control Plane (K3s) |

| - Coder Server (2 replicas) |

| - PostgreSQL Database |

| - Vault (Secrets Management) |

| - Forgejo (Git/CI/CD) |

| - cliProxy (OAuth to API Key Translation) |

+================================================================+

|

↓

+================================================================+

| Proxmox VE Cluster (Multiple Nodes) |

| - Workspace VM provisioning |

| - Resource allocation (CPU, RAM, Disk) |

| - Network management |

| - High availability and live migration |

| - NVMe local storage (ZFS replicated across nodes) |

+================================================================+

|

↓

+================================================================+

| TrueNAS Storage Cluster (Multiple Servers) |

| - NFS home directories (persistent user data) |

| - iSCSI block storage (VM disks) |

| - ZFS datasets with quotas |

+================================================================+

🔥 Critical Infrastructure Detail: NVMe Storage Architecture

The NVMe storage referenced in the Proxmox layer is a key component of the high-availability design:

- Local NVMe on Each Proxmox Node: Each Proxmox node has its own NVMe storage pool

- Identical Storage Names: All nodes use the same storage pool name (e.g., “nvme-storage”)

- ZFS Replication: ZFS replication keeps data synchronized across all Proxmox nodes

- Kubernetes Cluster Storage: This replicated NVMe storage hosts the entire K3s cluster:

- Control plane nodes

- Gateway nodes

- Worker nodes

- All Kubernetes persistent volumes

Why This Matters:

This architecture enables true high availability - if a Proxmox node fails, the Kubernetes VMs can seamlessly migrate to another node because the NVMe storage is replicated. The identical storage naming means Proxmox sees the same storage pool name on every node, making live migration transparent.

Coder Deployment Flow:

- Kubernetes cluster runs on replicated NVMe across Proxmox nodes

- Coder Server deploys within this K3s cluster

- Coder provisions workspace VMs on Proxmox using available resources

- Workspace VMs use TrueNAS for persistent home directories (NFS)

- Workspace VM disks are stored on TrueNAS iSCSI

Kubernetes Layer: Coder Control Plane

Cluster Configuration:

- Platform: K3s (lightweight Kubernetes)

- Node Count: 3 nodes (1 control plane + 2 workers)

- Networking: Flannel CNI with 10GbE backend

- Storage: Local path provisioner for persistent volumes

- Load Balancing: Traefik ingress controller

Deployed Services:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

# Coder Server

replicas: 2

resources:

cpu: 2 cores per pod

memory: 4GB per pod

storage: PostgreSQL on persistent volume

# PostgreSQL

replicas: 1 (with backup strategy)

storage: 100GB persistent volume

backup: Daily snapshots to TrueNAS

# Vault

replicas: 1

storage: Persistent KV store

purpose: Proxmox credentials, API keys, secrets

# Forgejo

replicas: 1

storage: Git repositories on persistent volume

runners: 2 Forgejo Actions runners for CI/CD

# cliProxy

replicas: 2 (load balanced)

purpose: OAuth → API key translation for AI services

High Availability Considerations:

- Coder Server runs with 2 replicas for redundancy

- PostgreSQL has automated daily backups

- K3s control plane can be restored from etcd snapshots

- Traefik provides automatic failover for ingress

Proxmox VE Cluster: Compute Layer

Five-Node High-Availability Cluster:

The platform runs on a 5-node Proxmox VE cluster providing 170 CPU cores and 1.1 TB of RAM for workspace VMs and infrastructure services.

Node 1 (Primary Compute - High-Density)

- CPU: 80 cores (dual AMD EPYC or high-core-count Xeon)

- RAM: 539 GB

- Storage: Local NVMe for VM disks

- Network: 10GbE bonded (LACP)

- Role: Primary workspace VM host, high-density workloads

- Current Load: ~14% CPU utilization

Node 2 (Secondary High-Performance)

- CPU: 40 cores (AMD EPYC/Ryzen or Xeon)

- RAM: 270 GB

- Storage: Local NVMe

- Network: 10GbE bonded

- Role: Production workloads, resource-intensive workspaces

- Current Load: ~3% CPU utilization

Node 3 (Tertiary Compute)

- CPU: 36 cores

- RAM: 270 GB

- Storage: Local NVMe

- Network: 10GbE bonded

- Role: General workspace hosting, HA quorum

- Current Load: ~7% CPU utilization

- Uptime: Exceptionally stable (weeks of continuous operation)

Node 4 (Management/Services)

- CPU: 8 cores

- RAM: 33 GB

- Storage: Local SSD

- Network: 10GbE

- Role: Infrastructure services, monitoring, lightweight workloads

- Current Load: ~6% CPU utilization

Node 5 (Edge/Development)

- CPU: 6 cores

- RAM: 16 GB

- Storage: Local SSD

- Network: 1GbE (separate location/network segment)

- Role: Dev/test workloads, edge computing, isolated workspaces

- Current Load: ~7% CPU utilization

Aggregate Cluster Capacity:

- Total CPU Cores: 170 cores (current avg utilization: <10%)

- Total RAM: 1.1 TB (current usage: ~487 GB / 44%)

- High Availability: 5-node quorum, supports 2 node failures

- Live Migration: VMs can migrate between nodes with zero downtime

- Storage Backend: iSCSI from TrueNAS cluster + local NVMe

- Network: Dedicated 10GbE storage VLAN, bonded management interfaces

- API Integration: Terraform provisioning via Vault-stored credentials

TrueNAS Storage Cluster: Persistence Layer

Four-Server Enterprise Storage Architecture:

The platform leverages four dedicated TrueNAS servers providing a combined 317+ TB of enterprise-grade ZFS storage with RAIDZ2 redundancy across all pools.

TrueNAS-01 (Primary NFS Server)

- Pool: Storage0

- Capacity: 43.64 TB usable (RAIDZ2, 6-drive array)

- Purpose: NFS home directories, workspace persistent data

- Network: 10GbE bonded (LACP)

- Datasets: Per-user ZFS datasets with quotas

- Export: NFSv4 with Kerberos authentication

- Current Usage: 65.82% (28.73 TB allocated)

- Snapshots: Automated hourly snapshots, 7-day retention

TrueNAS-02 (High-Capacity Storage)

- Pool 1 (storage01): 87.28 TB usable (RAIDZ2, 8-drive array)

- Pool 2 (storage02): 76.38 TB usable (RAIDZ2, 7-drive array)

- Total Capacity: 163.66 TB

- Purpose: Large dataset storage, backup targets, archive storage

- Network: 10GbE bonded

- Protocols: NFS, iSCSI, SMB

- Current Usage: 0.01% (practically empty, ready for expansion)

- Redundancy: Dual parity (RAIDZ2) - tolerates 2 disk failures per pool

TrueNAS-03 (VM Block Storage)

- Pool 1 (vmstore1): 6.53 TB usable (RAIDZ2, 6-drive array)

- Pool 2 (vmstore2): 16.34 TB usable (2x RAIDZ2 vdevs, 5 drives each)

- Total Capacity: 22.88 TB

- Purpose: iSCSI LUNs for Proxmox VM disks

- Network: 10GbE dedicated VLAN for storage traffic

- Performance: Low-latency iSCSI for production VMs

- Current Usage: vmstore1 at 53%, vmstore2 at 3%

- Fragmentation: vmstore1 at 46% (active VM workloads)

TrueNAS-04 (Expansion/Backup)

- Pool: tank

- Capacity: 87.28 TB usable (RAIDZ2, 8-drive array)

- Purpose: Replication target, disaster recovery, cold storage

- Network: 10GbE

- Current Usage: 0.13% (120 GB allocated)

- Replication: Receives snapshots from other TrueNAS servers

Aggregate Storage Capacity:

- Total Raw Capacity: 317.46 TB usable across all pools

- Total Allocated: ~33 TB (10.4% utilization)

- Total Available: ~284 TB ready for growth

- All Pools: RAIDZ2 redundancy (dual parity)

- Network Throughput: 10GbE across all servers

- Data Protection: Automated snapshots, scrubs, and cross-server replication

TrueNAS Storage Cluster: Persistence Layer

Multi-Server Architecture:

The platform uses multiple TrueNAS servers to provide distributed, redundant storage for different use cases:

TrueNAS Server 1 (Primary NFS)

- Purpose: NFS home directories for workspaces

- Storage: 8TB usable ZFS pool (RAIDZ2)

- Network: 2x 10GbE (LACP bonded)

- Datasets: Individual ZFS datasets per user with quotas

- Export: NFSv4 with Kerberos authentication

- Performance: ~900MB/s sequential read/write

- Snapshots: Hourly snapshots, retained for 7 days

TrueNAS Server 2 (iSCSI Block Storage)

- Purpose: iSCSI LUNs for Proxmox VM disks

- Storage: 16TB usable ZFS pool (RAIDZ2)

- Network: Dedicated 10GbE for iSCSI traffic

- LUNs: Block storage for workspace VM boot disks

- Performance: ~600MB/s with low latency

- Use Case: General development workspaces needing ample capacity

TrueNAS Server 3 (NVMe/SSD Pool)

- Purpose: High-performance NVMe-backed storage

- Storage: 4TB NVMe ZFS pool (mirrors)

- Network: 10GbE with RDMA support

- Export: NFSv4 + iSCSI for high-IOPS workloads

- Performance: ~2GB/s, <100μs latency

- Use Case: Database development, compilation-heavy workloads

Storage Selection in Templates:

Workspace templates allow developers to choose storage backend:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

data "coder_parameter" "storage_backend" {

name = "storage_type"

option {

name = "iSCSI (Ample Capacity)"

value = "iscsi"

description = "16TB pool, good for most workloads"

}

option {

name = "NVMe (High Performance)"

value = "nvme"

description = "4TB pool, ultra-low latency"

}

}

resource "proxmox_virtual_environment_vm" "workspace" {

disk {

datastore_id = var.storage_backend == "nvme" ? "nvme-pool" : "iscsi-pool"

}

}

NFS Home Directory Architecture:

All workspaces mount their home directory from TrueNAS Server 1 via NFS:

1

2

3

4

Workspace VM → 10GbE Network → TrueNAS NFS Server → ZFS Dataset

↓ ↓

/home/${username} /mnt/tank/coder/users/${username}/${workspace}

(NFS mount) (ZFS dataset with quota)

Benefits:

- True Persistence: Home directory survives workspace destruction

- Quota Enforcement: ZFS quotas prevent runaway disk usage

- Snapshots: Hourly snapshots for accidental deletion recovery

Data Persistence: The NFS Architecture That Changes Everything

The Problem with Ephemeral VMs

By default, Coder treats workspace VMs as ephemeral - when you stop, restart, or rebuild a workspace, the VM is destroyed and recreated from scratch. This is fantastic for ensuring clean, reproducible environments, but it creates a critical challenge: where does your data go?

Traditional solutions involve:

- Local VM storage: Lost on every rebuild

- Git repositories: Only code, not your entire development environment

- Manual backups: Time-consuming and error-prone

For a production-grade developer platform, we needed something better: true persistence that survives VM destruction while maintaining the clean-slate benefits of ephemeral infrastructure.

The Solution: External NFS + ZFS Dataset Lifecycle Management

The breakthrough came from separating compute (ephemeral VMs) from storage (persistent NFS mounts). Here’s how it works:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

┌─────────────────────────────────────────────────────────────────┐

│ Workspace Lifecycle │

├─────────────────────────────────────────────────────────────────┤

│ │

│ Workspace Created: │

│ ┌─────────────────────────────────────────────────────────┐ │

│ │ 1. Terraform: Run truenas-dataset-manager.sh create │ │

│ │ → Creates ZFS dataset: tank/coder/users/john/ws1 │ │

│ │ → Sets quota: 100GB (user-configurable) │ │

│ │ → Creates NFS share for dataset │ │

│ │ → Sets ownership: uid 1000, gid 1000 │ │

│ │ │ │

│ │ 2. Proxmox: Provision VM from template │ │

│ │ → CPU: 4 cores (slider: 1-8) │ │

│ │ → RAM: 8GB (slider: 2-32GB) │ │

│ │ → Disk: 40GB local (slider: 20-100GB) │ │

│ │ │ │

│ │ 3. Cloud-init: Mount NFS on first boot │ │

│ │ → mount 192.168.x.x:/mnt/tank/coder/users/john/ws1 │ │

│ │ → /home/${username} │ │

│ │ → All user data now on persistent NFS │ │

│ └─────────────────────────────────────────────────────────┘ │

│ │

│ Workspace Stopped/Started/Rebuilt: │

│ ┌─────────────────────────────────────────────────────────┐ │

│ │ VM Destroyed → Recreated from template │ │

│ │ NFS Dataset: UNTOUCHED - still exists on TrueNAS │ │

│ │ On boot: Re-mounts same NFS share │ │

│ │ Result: All files, configs, history preserved │ │

│ └─────────────────────────────────────────────────────────┘ │

│ │

│ Workspace DELETED: │

│ ┌─────────────────────────────────────────────────────────┐ │

│ │ 1. Terraform destroy triggers: │ │

│ │ → truenas-dataset-manager.sh delete john ws1 │ │

│ │ → Finds NFS share by path, deletes it │ │

│ │ → Runs: zfs destroy -r tank/coder/users/john/ws1 │ │

│ │ → Dataset and all data permanently removed │ │

│ │ │ │

│ │ 2. VM deleted from Proxmox │ │

│ └─────────────────────────────────────────────────────────┘ │

│ │

└─────────────────────────────────────────────────────────────────┘

The Magic Script: truenas-dataset-manager.sh

This Bash script is the heart of the persistence architecture. It manages the complete lifecycle of ZFS datasets and NFS shares through a clever SSH routing pattern:

Coder Server (K3s) → Proxmox Host → TrueNAS

The script provides four operations:

1. Create Dataset with Quota

1

2

3

4

5

6

7

8

9

10

/usr/local/bin/coder-scripts/truenas-dataset-manager.sh create john workspace-1 100

# What happens:

# 1. SSH to Proxmox (192.168.x.x)

# 2. From Proxmox, SSH to TrueNAS (192.168.x.x)

# 3. Create ZFS dataset: zfs create -p tank/coder/users/john/workspace-1

# 4. Set quota: zfs set refquota=100G tank/coder/users/john/workspace-1

# 5. Set ownership: chown -R 1000:1000 /mnt/tank/coder/users/john/workspace-1

# 6. Create NFS share via TrueNAS midclt API

# 7. Return to Coder, continue provisioning

2. Delete Dataset (Workspace Deletion Only)

1

2

3

4

5

6

7

8

/usr/local/bin/coder-scripts/truenas-dataset-manager.sh delete john workspace-1

# What happens:

# 1. SSH to Proxmox → TrueNAS

# 2. Find NFS share ID by path using midclt query

# 3. Delete NFS share: midclt call sharing.nfs.delete <id>

# 4. Destroy dataset: zfs destroy -r tank/coder/users/john/workspace-1

# 5. All user data for this workspace permanently removed

3. Update Quota (User Adjusts Slider)

1

2

3

4

/usr/local/bin/coder-scripts/truenas-dataset-manager.sh update-quota john workspace-1 200

# User increased storage from 100GB to 200GB

# ZFS immediately applies new quota without downtime

4. Check Quota (Monitoring)

1

2

3

4

5

6

/usr/local/bin/coder-scripts/truenas-dataset-manager.sh check-quota john workspace-1

# Returns:

# tank/coder/users/john/workspace-1 refquota 200G

# tank/coder/users/john/workspace-1 used 45.3G

# tank/coder/users/john/workspace-1 available 154.7G

Terraform Integration: The Critical Lifecycle Hooks

In each Coder template (main.tf), the dataset lifecycle is managed with Terraform’s null_resource and provisioners:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

resource "null_resource" "nfs_dataset" {

# CREATE: Run when workspace is created or quota changes

provisioner "local-exec" {

command = "/usr/local/bin/coder-scripts/truenas-dataset-manager.sh create ${data.coder_workspace_owner.me.name} ${data.coder_workspace.me.name} ${data.coder_parameter.storage_quota.value}"

}

# DELETE: Run ONLY when workspace is destroyed (not stopped!)

provisioner "local-exec" {

when = destroy

command = "/usr/local/bin/coder-scripts/truenas-dataset-manager.sh delete ${self.triggers.username} ${self.triggers.workspace_name} || true"

}

# Triggers: Recreate if these values change

triggers = {

workspace_name = data.coder_workspace.me.name

username = data.coder_workspace_owner.me.name

storage_quota = data.coder_parameter.storage_quota.value

}

}

# VM must wait for NFS dataset to be ready

resource "proxmox_virtual_environment_vm" "workspace" {

depends_on = [null_resource.nfs_dataset]

# VM configuration...

# On boot, cloud-init mounts the NFS share

}

Key Design Decision: The when = destroy provisioner only runs on workspace deletion, not on stop/start/rebuild. This means:

✅ Stop workspace: VM deleted, NFS dataset untouched

✅ Start workspace: New VM created, mounts existing NFS dataset, all data intact

✅ Rebuild workspace: Old VM deleted, new VM created, mounts existing NFS, data preserved

✅ Delete workspace: VM deleted, THEN NFS dataset deleted permanently

Why This Architecture is Brilliant

- True Persistence: Your entire

/home/${username}directory survives any VM operation except deletion - Clean Rebuilds: Destroy and recreate VMs freely without worrying about data loss

- Per-User Isolation: Each workspace gets its own ZFS dataset with quota enforcement

- Storage Flexibility: Users can adjust quotas with a slider (10GB to 500GB)

- ZFS Benefits:

- Compression: Automatic LZ4 compression saves space

- Snapshots: TrueNAS can snapshot datasets for backup/rollback

- Deduplication: Optional dedup across users

- Quota Enforcement: Hard limits prevent one user filling the pool

- Secure Deletion: When a workspace is deleted, ALL data is destroyed - no orphaned datasets

- Network Independence: NFS mount works across any Proxmox node

Real-World Example: Developer Workflow

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

# Day 1: Create workspace

coder create my-project --template proxmox-vm-codex

# → ZFS dataset created: tank/coder/users/john/my-project (100GB)

# → VM created and mounts NFS

# → Install tools, clone repos, configure environment

# Day 2: Stop workspace to save resources

coder stop my-project

# → VM destroyed

# → NFS dataset untouched

# Day 3: Start workspace

coder start my-project

# → New VM created from template

# → Mounts existing NFS dataset

# → All files, configs, Docker containers still there!

# Week 2: Need more storage

# Open Coder UI → Rebuild workspace → Adjust slider: 100GB → 200GB

# → Terraform detects trigger change

# → Runs: truenas-dataset-manager.sh update-quota john my-project 200

# → ZFS immediately applies new quota

# → VM rebuilt with more storage capacity

# Month 3: Project complete, delete workspace

coder delete my-project

# → VM destroyed

# → Terraform destroy provisioner triggered

# → truenas-dataset-manager.sh delete john my-project

# → NFS share removed

# → ZFS dataset destroyed

# → All data permanently deleted

The Multi-Hop SSH Pattern

One fascinating aspect of this architecture is how it works around network topology. The Coder server (running in K3s) cannot directly SSH to TrueNAS due to network segmentation. The solution:

1

2

3

4

5

6

7

8

┌──────────────┐ ┌──────────────┐ ┌──────────────┐

│ Coder Server │ ──SSH──→│ Proxmox Host │ ──SSH──→│ TrueNAS │

│ (K3s Pod) │ │ 192.168.x.x │ │ 192.168.x.x │

└──────────────┘ └──────────────┘ └──────────────┘

↓ ↓ ↓

Executes Has SSH access to Manages ZFS

Terraform TrueNAS (management datasets & NFS

templates network access) shares

The script uses nested SSH commands:

1

ssh [email protected] "ssh [email protected] 'zfs create -p tank/coder/users/john/ws1'"

This pattern works because:

- Coder server has SSH keys for Proxmox

- Proxmox has SSH keys for TrueNAS

- All authentication is key-based (no passwords)

- Commands execute seamlessly across the chain

Performance Considerations

Why NFS over iSCSI?

- NFS: File-level protocol, perfect for home directories

- iSCSI: Block-level protocol, overkill for developer workspaces

- NFS advantages:

- No complex multipath setup

- Works seamlessly across Proxmox nodes

- Easy backup (TrueNAS snapshots)

- Simpler quota management

Network Performance:

- TrueNAS connected via 10GbE to Proxmox cluster

- NFS over TCP for reliability

- ZFS ARC (Adaptive Replacement Cache) on TrueNAS provides excellent read performance

- Developer workloads are not I/O intensive enough to saturate NFS

Why This Matters

This persistence architecture is the foundation that makes Coder viable for production use. Without it, developers would:

- Lose work on every rebuild

- Fear VM maintenance

- Store everything in Git (even databases, configs, etc.)

- Need manual backup strategies

With this architecture, developers get:

- Confidence: Stop/start/rebuild freely without fear

- Flexibility: Adjust resources without data loss

- Isolation: Per-workspace storage quotas

- Clean Slate: Delete workspace = clean deletion, no orphaned data

- Enterprise-Grade: Same patterns used by cloud providers (EBS, Persistent Disks, etc.)

The beauty is that it’s 100% open source - ZFS, NFS, Terraform, Bash - no proprietary magic, just solid engineering.

- Performance: 10GbE provides excellent throughput for development workloads

- Mobility: Workspaces can be recreated on any Proxmox node with same home directory

Networking Architecture

Note: The VLAN IDs and subnet ranges shown below are examples for illustration purposes.

Network Segmentation:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

+================================================================+

| Management Network (Example: VLAN 10) |

| - Proxmox management interfaces |

| - TrueNAS management |

| - Kubernetes API server |

| Example Subnet: 192.168.10.x/24 |

+================================================================+

+================================================================+

| Workspace Network (Example: VLAN 20) |

| - Workspace VM primary interfaces |

| - Internet access (NAT) |

| - Inter-workspace communication |

| Example Subnet: 192.168.20.x/24 |

+================================================================+

+================================================================+

| Storage Network (Example: VLAN 30) |

| - NFS traffic (TrueNAS to Workspace VMs) |

| - iSCSI traffic (TrueNAS to Proxmox) |

| - 10GbE dedicated bandwidth |

| Example Subnet: 10.10.30.x/24 (high-performance routing) |

+================================================================+

+================================================================+

| Services Network (Example: VLAN 40) |

| - Kubernetes service network |

| - Coder agent communication |

| - WebSocket connections |

| Example Subnet: 192.168.40.x/24 |

+================================================================+

10GbE Backbone:

- All infrastructure components connected via 10 Gigabit Ethernet

- Storage network prioritized with QoS for NFS/iSCSI traffic

- Bonded interfaces on critical nodes for redundancy

- Jumbo frames (MTU 9000) enabled on storage network

Routing and Connectivity:

- Ubiquiti UniFi Network handling inter-VLAN routing

- NAT for workspace internet access

- Firewall rules restrict workspace → management network

- DNS handled by internal DNS server (Pi-hole or similar)

Reliability and Redundancy

Component Redundancy:

| Component | Redundancy Strategy | Recovery Time |

|---|---|---|

| Coder Server | 2 Kubernetes replicas | Instant (load balanced) |

| PostgreSQL | Daily backups + WAL archiving | <5 minutes |

| Proxmox Nodes | 4-node cluster with HA | <2 minutes (VM migration) |

| TrueNAS Storage | Multiple independent servers | Varies by storage tier |

| Network | Bonded 10GbE interfaces | Instant failover |

| Power | Dual PSU + UPS per server | Seconds |

Disaster Recovery Strategy:

- Kubernetes Cluster: etcd snapshots every 6 hours, stored on TrueNAS

- PostgreSQL Database: Daily full backups, point-in-time recovery enabled

- TrueNAS Datasets: ZFS replication to backup TrueNAS (hourly sync)

- Proxmox Configuration: Cluster config backed up weekly

- Workspace VMs: Ephemeral (can be recreated), data persisted on NFS

Backup Infrastructure:

1

2

3

4

Primary Infrastructure → Backup TrueNAS (off-site or isolated)

↓ ↓

ZFS Send/Receive Encrypted backups

Hourly replication 7-day retention

Monitoring and Observability

Infrastructure Monitoring:

- Prometheus: Metrics collection from Kubernetes, Proxmox, TrueNAS

- Grafana: Dashboards for infrastructure health, workspace usage

- Alertmanager: Notifications for infrastructure issues

- Loki: Log aggregation from all components

Key Metrics Tracked:

- Proxmox node CPU/RAM/disk utilization

- TrueNAS pool capacity and IOPS

- Kubernetes pod health and resource usage

- Workspace VM count and resource allocation

- Network bandwidth usage per VLAN

- NFS mount performance metrics

Scaling Considerations

Current Capacity:

- Concurrent Workspaces: ~40-50 with current resources

- Total Storage: ~28TB across 3 TrueNAS servers

- Network Throughput: 20Gbps+ aggregate (10GbE bonded)

- CPU Allocation: ~80 cores available for workspaces

Expansion Strategy:

- Horizontal: Add Proxmox nodes for more workspace capacity

- Vertical: Upgrade existing nodes (more RAM/CPU)

- Storage: Add TrueNAS servers or expand existing pools

- Network: 25GbE or 40GbE upgrade path available

Why This Architecture?

Separation of Concerns:

- Control Plane (K8s): Orchestration and management

- Compute (Proxmox): Workspace VM execution

- Storage (TrueNAS): Data persistence and quotas

Benefits of Multi-Layer Approach:

- Flexibility: Replace components independently

- Scalability: Scale compute and storage separately

- Reliability: Failure in one layer doesn’t cascade

- Performance: Optimize each layer for its workload

- Cost Efficiency: Use appropriate hardware for each role

This architecture demonstrates that sophisticated cloud-like infrastructure can be built on-premises with careful planning and the right open-source tools.

Authentication and Identity Flow

A critical aspect of the platform is how authentication and identity flow through the entire stack, from initial login to dataset provisioning. Everything is tied together through Authentik SSO - from Coder access to Vault secrets to workspace app links.

SSO with Authentik: The Central Identity Provider:

The platform uses Authentik as the central SSO (Single Sign-On) provider for ALL services:

1

2

3

4

5

6

7

8

9

Authentik SSO (Central Identity)

↓

+==========================================================+

↓ ↓ ↓

Coder Login Vault Access Workspace Apps

(Platform) (Secrets) (Tools/Services)

↓ ↓ ↓

Workspace Create API Keys Retrieval One-Click Access

Dataset Creation Proxmox Creds (links in Coder UI)

Complete Authentication Flow:

- User Accesses Coder

- Developer navigates to Coder web UI

- Coder redirects to Authentik SSO login page

- Authentik can integrate with: Google Workspace, Azure AD, Okta, GitHub, etc.

- SSO Authentication

- Developer authenticates via SSO provider (e.g., Google)

- Authentik validates credentials against configured provider

- Authentik issues OAuth/OIDC token with user identity

- Token includes:

username,email,groups,claims

- Coder Session Creation

- Coder receives authentication token from Authentik

- Coder validates token and extracts

username - Coder creates session and associates with username

- Username becomes the primary identity throughout the platform

- Workspace Provisioning

- Developer clicks “Create Workspace” in Coder UI

- Coder passes

usernameto Terraform provisioner - Terraform uses username as variable:

data.coder_workspace.me.owner

- Vault Integration (SSO-Protected)

- Vault also authenticates via Authentik SSO

- Terraform needs Proxmox credentials → Queries Vault

- Vault validates Terraform’s service account via Authentik

- Vault returns Proxmox API credentials for VM provisioning

- Future: User-specific secrets accessible via personal Vault login

- Dynamic Dataset Creation

- Terraform invokes TrueNAS dataset manager script

- Script receives username:

truenas-dataset-manager.sh create ${username} ${quota} - Script creates ZFS dataset:

/mnt/tank/coder-home/${username} - Script sets ZFS quota and creates NFS export

- Workspace VM Configuration

- Cloud-init mounts:

nfs-server:/mnt/tank/coder-home/${username}→/home/${username} - Coder agent connects with user identity

- Workspace becomes accessible

- Cloud-init mounts:

- Workspace App Links

- Coder UI shows clickable links to integrated services

- Click “Open Vault” → Authenticates via same Authentik SSO → Access personal secrets

- Click “Open Grafana” → SSO login → View workspace metrics

- Click “Open Git” → SSO to Forgejo → Access repositories

- All apps use the same SSO identity - no separate logins!

Identity Consistency Across All Services:

| Service | SSO via Authentik | Username Usage |

|---|---|---|

| Coder | ✅ Yes | Primary platform login, workspace owner |

| Vault | ✅ Yes (integrated) | Retrieve Proxmox creds, personal secrets |

| Forgejo | ✅ Yes | Git push/pull, CI/CD access |

| Grafana | ✅ Yes | View personal workspace metrics |

| cliProxy | ✅ Yes | OAuth → API key for AI services |

| TrueNAS | ❌ No (script-based) | Dataset creation via API |

| Proxmox | ✅ Yes (SSO enabled, access controlled) | Admins authenticate via Authentik SSO |

Note: Proxmox is integrated with Authentik SSO for administrator authentication. Workspace users do not have direct access to Proxmox - this demonstrates the power of SSO with proper access control. Authentication is centralized via Authentik, but access is segregated based on roles. Only platform administrators can log into Proxmox; workspace VMs are provisioned automatically via Coder using API credentials stored in Vault.

Workspace App Links: Enhanced Developer Experience:

When you open a workspace in Coder, the UI displays clickable app links:

1

2

3

4

5

6

7

8

9

10

11

12

+==========================================================+

| Workspace: crimson-mite-10 [Running] |

+==========================================================+

| Apps: |

| 🖥️ VS Code Desktop [Open in Browser] |

| 🤖 Codex AI [Open Terminal] |

| 💬 Droid AI [Chat Interface] |

| 🔐 Vault (SSO) [Open Secrets] |

| 📊 Grafana (SSO) [View Metrics] |

| 🔧 Forgejo (SSO) [Git Repos] |

| 📦 S3 Bucket [Object Storage] |

+==========================================================+

How App Links Work:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

# In Coder template - define app links

resource "coder_app" "vault" {

agent_id = coder_agent.main.id

display_name = "Vault (Personal Secrets)"

url = "https://vault.example.com"

icon = "https://vault.io/favicon.ico"

# Authentik SSO protects Vault access

# User clicks link → Redirects to Vault → Authentik SSO → Vault UI

}

resource "coder_app" "grafana" {

agent_id = coder_agent.main.id

display_name = "Workspace Metrics"

url = "https://grafana.example.com/d/workspace?var-user=${data.coder_workspace.me.owner}"

icon = "https://grafana.com/favicon.ico"

# Shows metrics for this specific workspace

# Pre-filtered by username via URL parameter

}

resource "coder_app" "s3_bucket" {

agent_id = coder_agent.main.id

display_name = "S3 Bucket"

url = "https://s3-console.example.com/buckets/${data.coder_workspace.me.owner}-workspace"

icon = "https://min.io/favicon.ico"

# Direct link to user's personal S3 bucket

}

Example User Journey:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

+===================+ +====================+ +==================+

| Developer | | Authentik SSO | | Coder UI |

| john.doe@ | | (Identity Hub) | | (Workspace |

| company.com | | | | Management) |

+===================+ +====================+ +==================+

| | |

| 1. Login Request | |

|===========================>| |

| | |

| 2. Authenticate | |

| (MFA if enabled) | |

|<===========================| |

| | |

| | 3. JWT Token |

| |============================>|

| | |

| | | 4. Create Workspace

| | | - Owner: john.doe

| | | - Dataset: /mnt/tank/coder-home/john.doe

| | | - S3: john.doe-workspace

| | |

| 5. Workspace Ready | |

|<=========================================================|

| | |

+================================ Workspace Active ================================+

| 6. Click [Open Vault] | |

|===========================>| |

| | |

| | 7. SSO to Vault |

|<===========================| |

| | |

+===================+ +====================+ +==================+

| 🔐 Vault | | 🏗️ Forgejo | | 📊 Grafana |

| (john.doe's | | (john.doe's | | (john.doe's |

| API keys) | | repositories) | | metrics) |

+===================+ +====================+ +==================+

↑ ↑ ↑

| | |

+----------------------------+-----------------------------+

All authenticated via Authentik SSO

All scoped to john.doe's permissions

What happens behind the scenes:

1

2

3

4

5

6

7

8

9

10

11

12

13

# Workspace provisioning flow:

john.doe logs in → Authentik SSO → Coder UI

# Workspace creation:

Owner: john.doe

Dataset: /mnt/tank/coder-home/john.doe (ZFS with 100GB quota)

S3 Bucket: john.doe-workspace (MinIO, auto-created)

# App link clicks use same SSO:

[Open Vault] → Authentik SSO → Vault UI → john.doe's secrets

[Open Grafana] → Authentik SSO → Grafana → john.doe's dashboards

[Open S3] → Direct link → S3 Console → john.doe-workspace bucket

[Open Forgejo] → Authentik SSO → Git repos → john.doe's repositories

Benefits of Unified SSO + App Links:

Security:

- One SSO provider (Authentik) for everything

- MFA enforced at central point

- Revoke access in one place = revoked everywhere

- No credentials stored in workspaces

Developer Experience:

- Single login - authenticate once, access everything

- No context switching - all tools accessible from Coder UI

- Personalized access - app links pre-filtered by username

- Instant access - click link, SSO happens automatically

Operational Excellence:

- Centralized identity - manage users in one place

- Audit trail - all access logged with real identity

- Automation - everything provisioned based on authenticated user

- Compliance - SSO audit logs for all system access

Vault SSO Integration:

Vault’s Authentik SSO integration enables:

1

2

3

4

5

Developer → Vault UI → Authentik SSO → Personal Namespace

↓

Personal Secrets (API keys, passwords)

Team Secrets (shared credentials)

Workspace Secrets (temporary tokens)

Future Enhancements:

- Workspace-Specific Vault Paths: Each workspace gets own Vault path

- Auto-Injected Secrets: Vault agent injects secrets into workspace

- Dynamic Database Creds: Vault generates temporary DB credentials per workspace

- Certificate Management: Vault issues short-lived TLS certs for workspace services

This creates a truly integrated platform where:

- ✅ Authenticate once via Authentik SSO

- ✅ Username flows through every layer automatically

- ✅ All services accessible via app links in Coder UI

- ✅ No separate logins for Vault, Grafana, Git, S3, etc.

- ✅ Complete audit trail tied to corporate identity

- ✅ Resources (datasets, buckets, secrets) automatically scoped to user

The combination of Authentik SSO, dynamic provisioning, and workspace app links creates an experience that rivals commercial cloud IDEs while maintaining complete control and security.

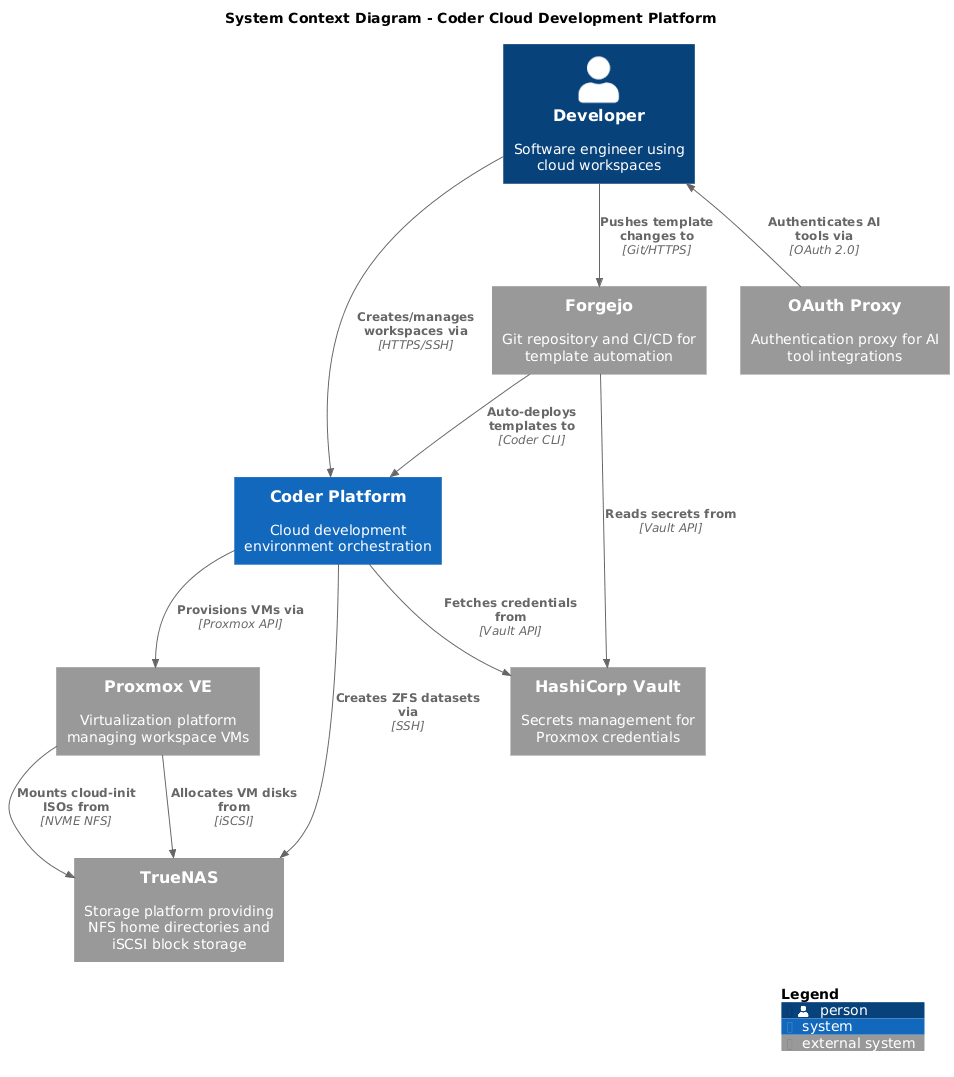

Architecture Overview

The platform consists of several integrated components working together to provide a seamless development experience.

Core Components

Coder Server (Kubernetes)

- Orchestrates workspace lifecycle

- Manages user authentication and authorization

- Provides WebSocket connectivity for agent communication

- Stores workspace metadata in PostgreSQL

Terraform Provisioner

- Provisions infrastructure declaratively

- Integrates with Proxmox for VM creation

- Manages storage via TrueNAS datasets

- Retrieves credentials securely from Vault

Workspace VMs (Proxmox)

- Ubuntu-based development environments

- NFS-mounted persistent home directories

- Customizable via template parameters

- Automatic startup/shutdown based on usage

Storage Backend (TrueNAS)

- ZFS datasets for each user

- NFS exports for workspace access

- Quota management per workspace

- Snapshot-based backups

System Context

The platform integrates with existing homelab services to provide a complete solution:

External Integrations

- Proxmox VE: Virtualization platform for workspace VMs

- TrueNAS: Network storage providing persistent home directories

- HashiCorp Vault: Secrets management for infrastructure credentials

- Forgejo: Git repository and CI/CD for template automation

- OAuth Proxy: Authentication for integrated tools

Container Architecture

The Kubernetes deployment provides high availability and scalability:

Kubernetes Components

coder-server

- Main Go application

- Handles API requests and WebSocket connections

- Manages workspace state transitions

postgres

- Stores workspace metadata

- User accounts and permissions

- Template versions and parameters

terraform

- Executes infrastructure provisioning

- Manages workspace resources

- Handles dependency resolution

vault

- Stores Proxmox API credentials

- Provides secure injection

- Rotates credentials automatically

token-rotator (CronJob)

- Rotates Coder API tokens every 6 days

- Updates Forgejo secrets automatically

- Ensures zero-downtime rotation

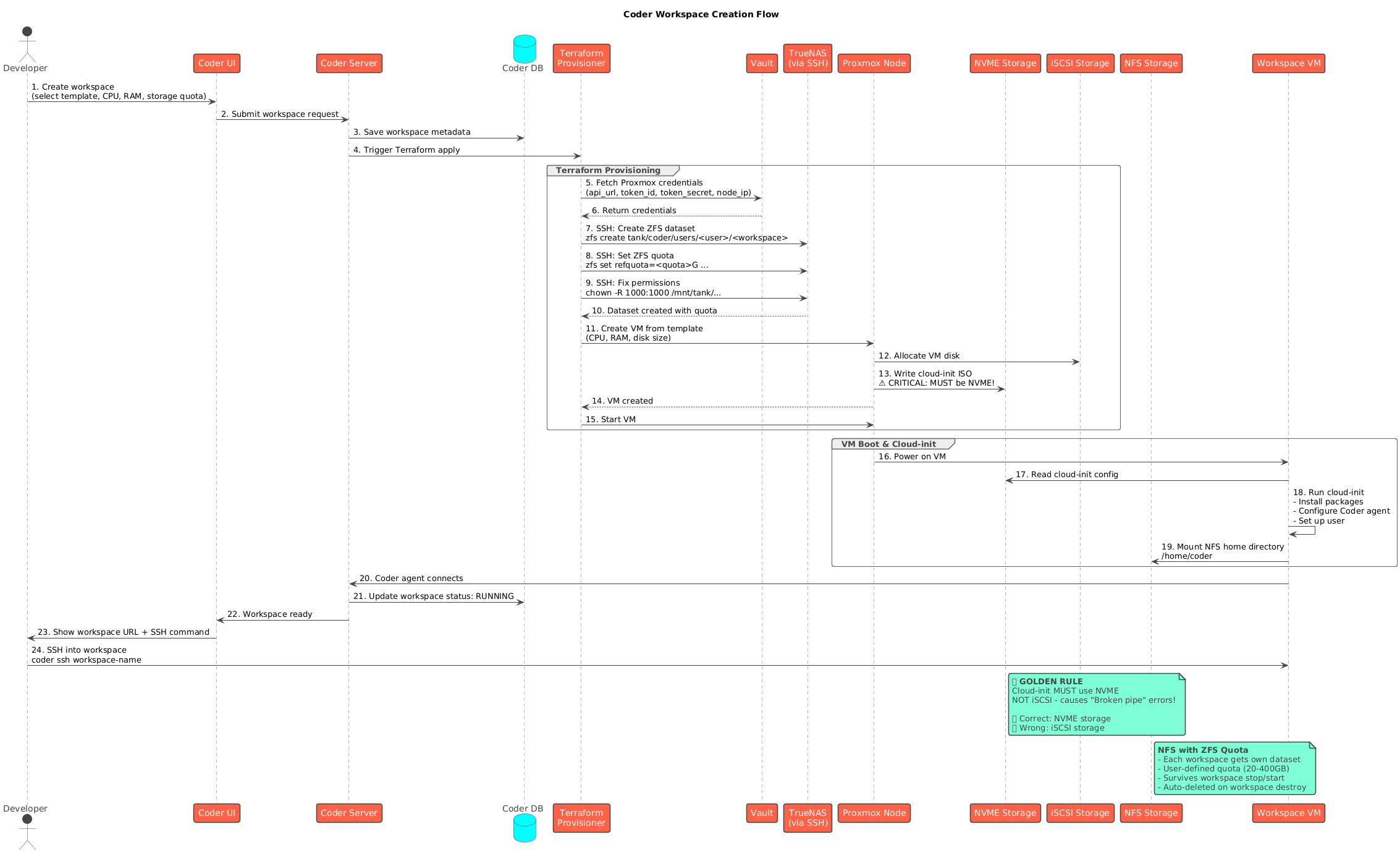

Workspace Creation Flow

When a developer creates a new workspace, several automated steps occur:

Provisioning Steps

- User Request: Developer selects a template and provides parameters (CPU, RAM, storage)

- Coder Orchestration: Server validates request and initiates Terraform job

- Credential Retrieval: Terraform fetches Proxmox credentials from Vault

- VM Creation: Proxmox provisions virtual machine with specified resources

- Storage Setup: TrueNAS creates ZFS dataset with quota and NFS export

- VM Configuration: Cloud-init configures VM and mounts NFS home directory

- Agent Connection: Coder agent starts and connects via WebSocket

- Ready State: Workspace becomes available for SSH/web IDE access

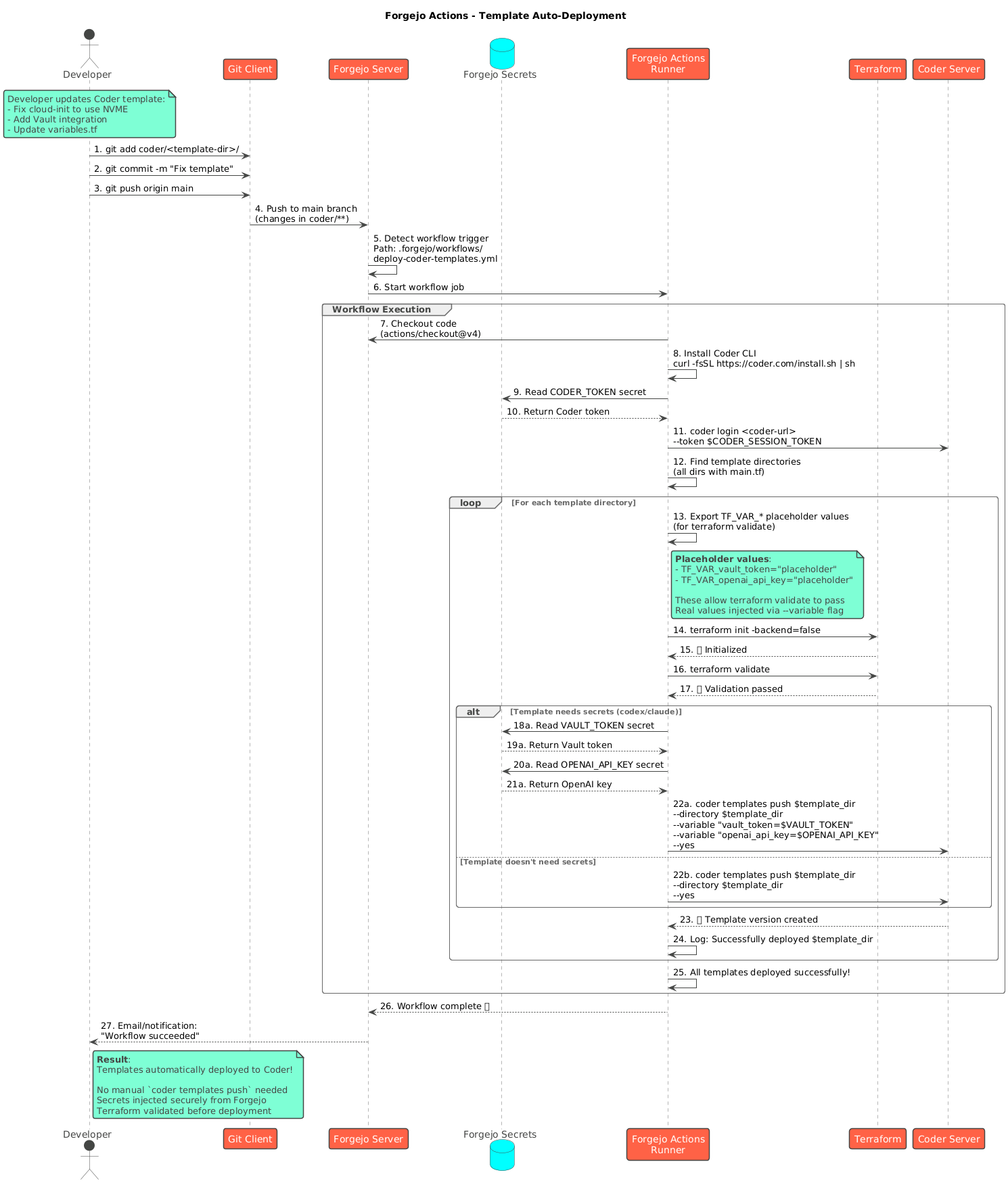

Template Auto-Deployment

Templates are version-controlled and automatically deployed via Forgejo Actions:

CI/CD Workflow

- Developer Push: Commit template changes to Git repository

- Webhook Trigger: Forgejo detects changes in template directories

- Validation: Terraform validates syntax and configuration

- Secret Injection: Forgejo secrets provide API credentials

- Template Push: Coder CLI deploys new template version

- Notification: Developer receives deployment confirmation

This ensures templates stay synchronized with Git and provides an audit trail for all changes.

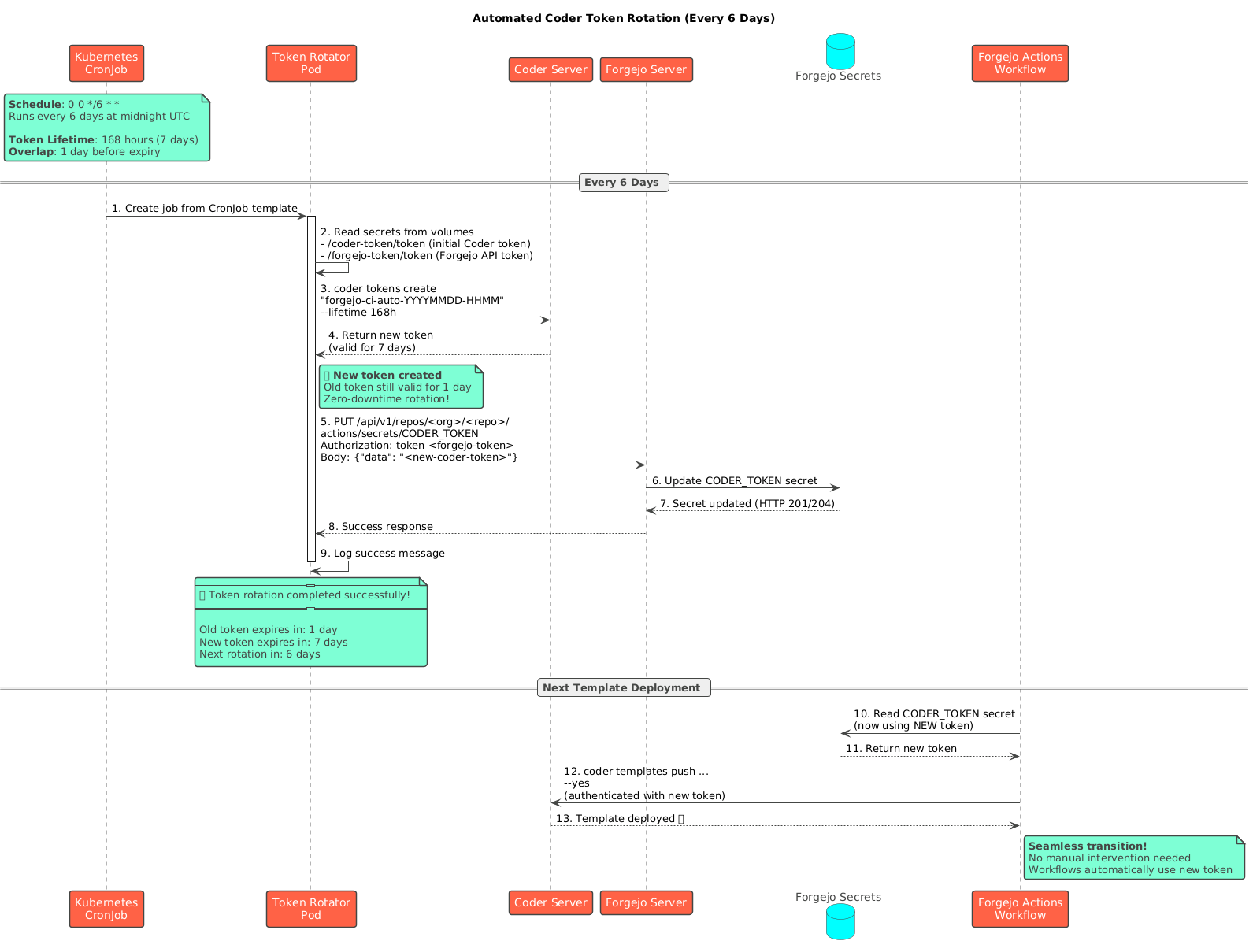

Token Rotation

Security is maintained through automated credential rotation:

Rotation Process

- Schedule: Kubernetes CronJob runs every 6 days

- Token Creation: Generate new Coder API token with 7-day lifetime

- Secret Update: Update Forgejo repository secrets via API

- Overlap Period: Old token remains valid for 1 day (zero-downtime)

- Next Rotation: Process repeats automatically

This ensures CI/CD pipelines never use expired credentials while maintaining security best practices.

AI Integration: Next-Level Development Experience

One of the most powerful aspects of the Coder platform is its seamless integration with AI-powered development tools. By providing consistent, remote development environments, Coder creates the perfect foundation for integrating advanced AI assistants that enhance developer productivity.

Aider and Claude in Every Workspace

Each workspace comes pre-configured with both Aider and Claude AI integration. This means developers can leverage AI-powered coding assistance directly within their development environment, regardless of their local machine setup.

What makes this powerful:

- Unified Environment: Both Aider and Claude work together in the same workspace

- Consistent Configuration: Every developer gets the same AI tooling setup

- Remote Execution: AI tools run on the workspace VM, not the developers laptop

- Persistent Context: AI assistance maintains context across sessions via NFS-backed storage

Coder Tasks: Clean AI Workflows

Coder provides a feature called Tasks that takes AI integration to the next level. Tasks allow developers to define custom commands and workflows that can be triggered directly from the Coder UI or CLI.

Benefits for AI Workflows:

- Streamlined Commands: Define tasks like “AI Code Review”, “Generate Tests”, “Refactor Module”

- Clean Interface: No need to remember complex CLI arguments or API endpoints

- Team Consistency: Share task definitions across the team via templates

- Audit Trail: All AI-assisted operations logged through Coders audit system

Example Task Definition:

1

2

3

4

5

6

7

8

tasks:

- name: "AI Code Review"

command: "aider --review --no-auto-commit"

description: "Run AI-powered code review on current changes"

- name: "Generate Unit Tests"

command: "aider --message Generate comprehensive unit tests for the current module"

description: "Use AI to generate test coverage"

This transforms AI coding assistance from a manual, ad-hoc process into a structured, repeatable workflow that integrates naturally with the development process.

The Power of Remote AI Integration

Running AI tools on remote workspaces instead of local machines provides significant advantages:

- Compute Flexibility: Scale workspace resources based on AI workload requirements

- Network Optimization: Direct connectivity between workspaces and AI API endpoints

- Credential Management: Centralized API key management through Vault integration

- Cost Control: Track AI API usage per workspace/team

- Consistent Performance: Developers arent limited by local machine capabilities

Implementation Considerations

The AI integration required careful planning and architecture:

- Token Authentication: Implemented proxy-based token authentication for remote AI services

- API Key Rotation: Integrated with Vault for secure, rotating API credentials

- Usage Tracking: Per-workspace metrics for AI API consumption

- Network Routing: Optimized paths for AI API calls to minimize latency

- Error Handling: Graceful degradation when AI services are unavailable

This level of integration required significant thought and engineering effort, but the result is a platform where AI assistance is a first-class feature, not an afterthought.

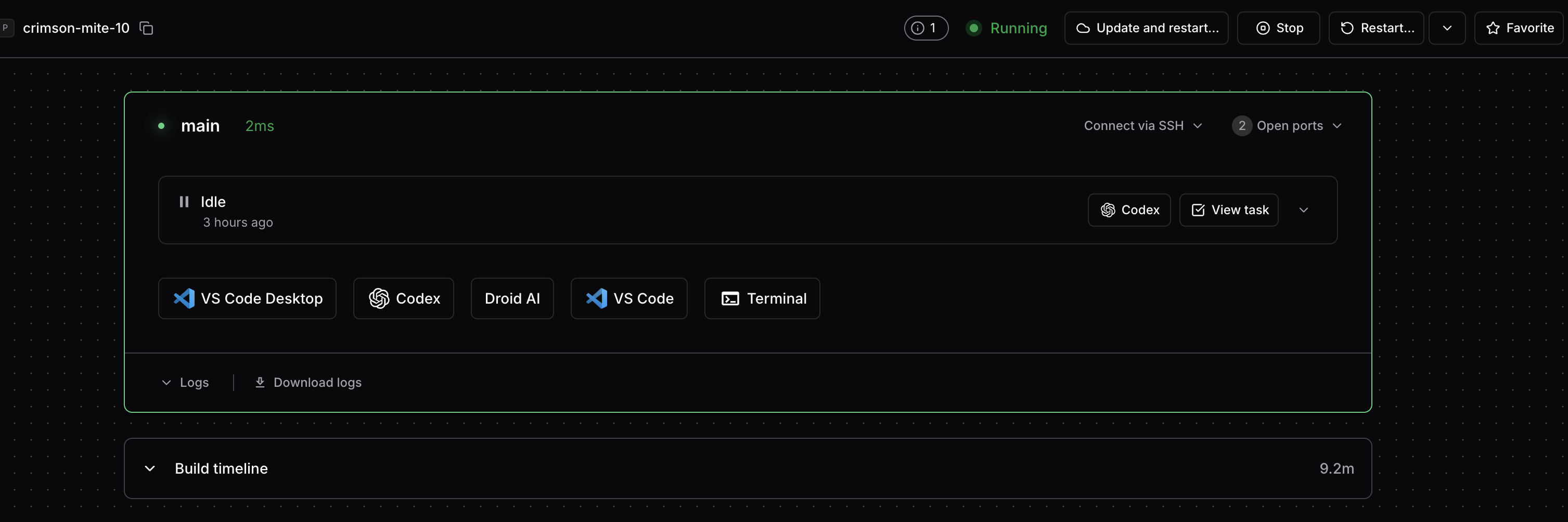

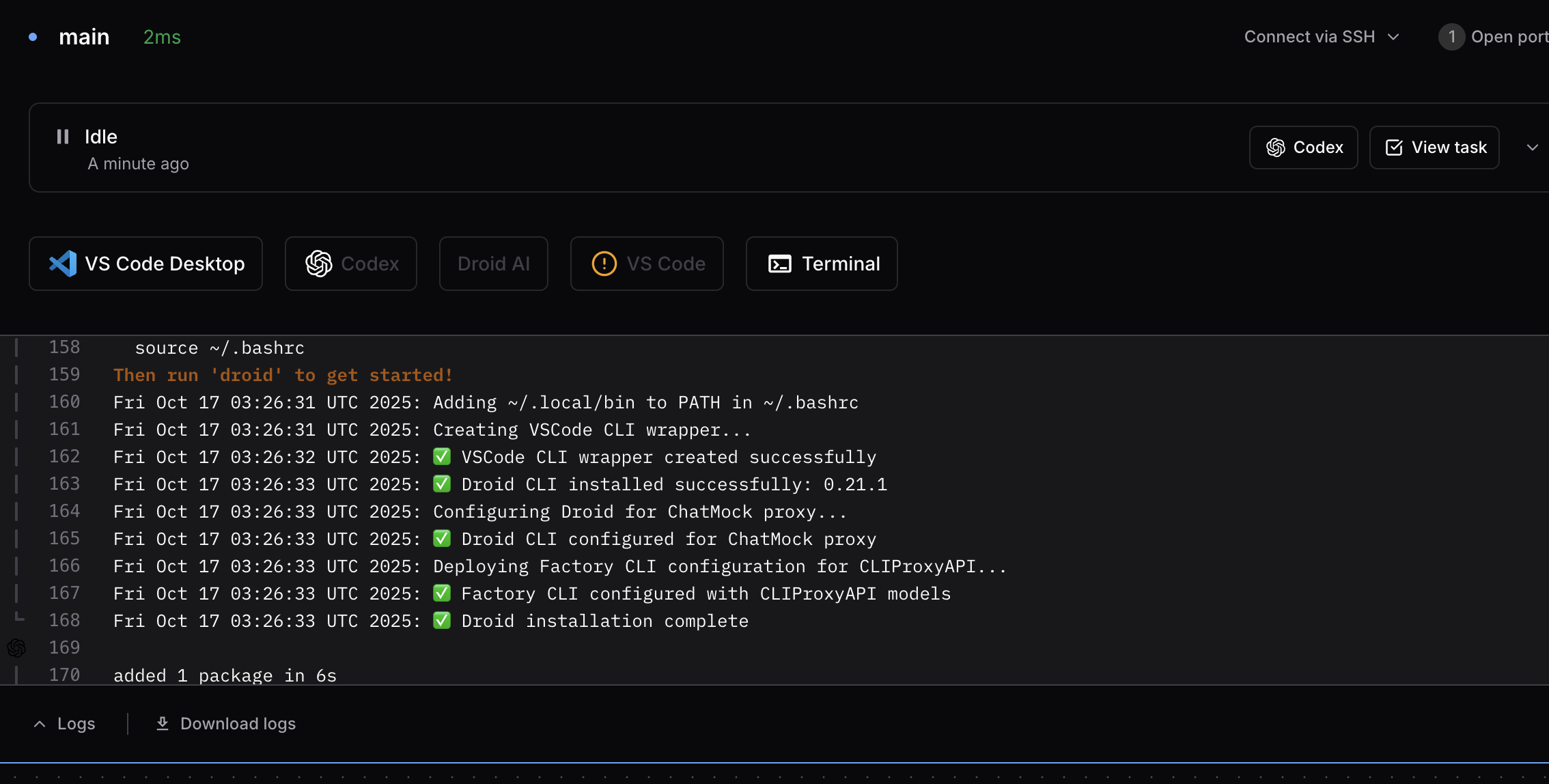

User Interface: Cloud-Like Experience

The Coder web UI provides an intuitive, cloud-service-like experience for managing workspaces:

Workspace dashboard showing running workspace with integrated tools: VS Code Desktop, Codex, Droid AI, VS Code, and Terminal - all accessible with one click

Workspace dashboard showing running workspace with integrated tools: VS Code Desktop, Codex, Droid AI, VS Code, and Terminal - all accessible with one click

Key UI Features:

- Workspace Status: Real-time status indicator (Running, Idle, Starting)

- Connection Method: SSH or web-based access

- Open Ports: Quick access to exposed services

- Integrated Tools: One-click access to development tools and AI assistants

- Build Timeline: Visual feedback on workspace provisioning progress

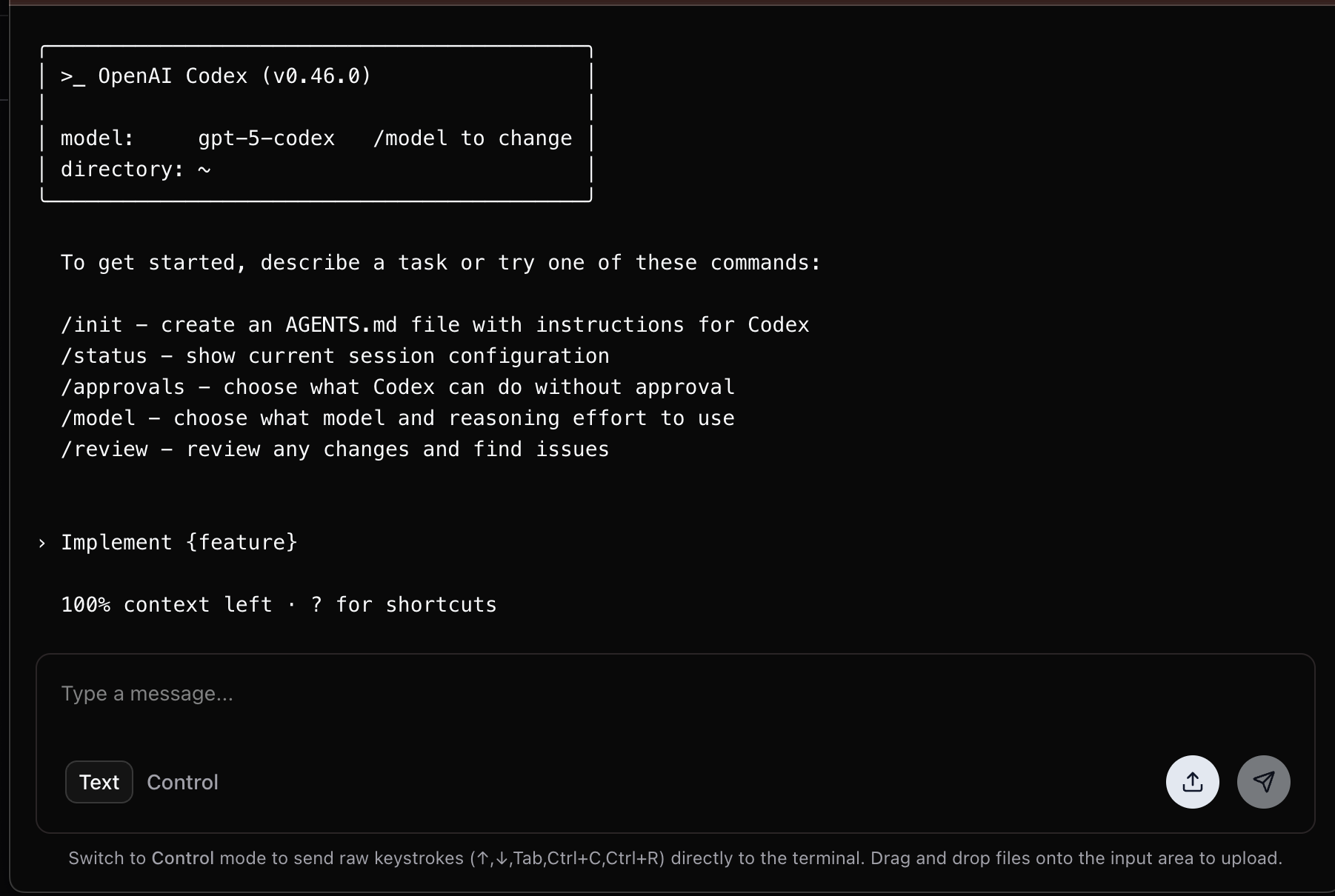

AI Integration in Action

AI-powered development tools are seamlessly integrated into every workspace:

OpenAI Codex running directly in the workspace terminal - ready to assist with code generation, reviews, and implementation tasks

OpenAI Codex running directly in the workspace terminal - ready to assist with code generation, reviews, and implementation tasks

The integration provides:

- Pre-configured AI tools: Codex, Aider, Claude, and Droid AI ready to use

- Context-aware assistance: AI tools have access to your entire codebase

- Multiple interaction modes: Terminal commands, editor integration, and task-based workflows

- Session persistence: AI context maintained across workspace sessions

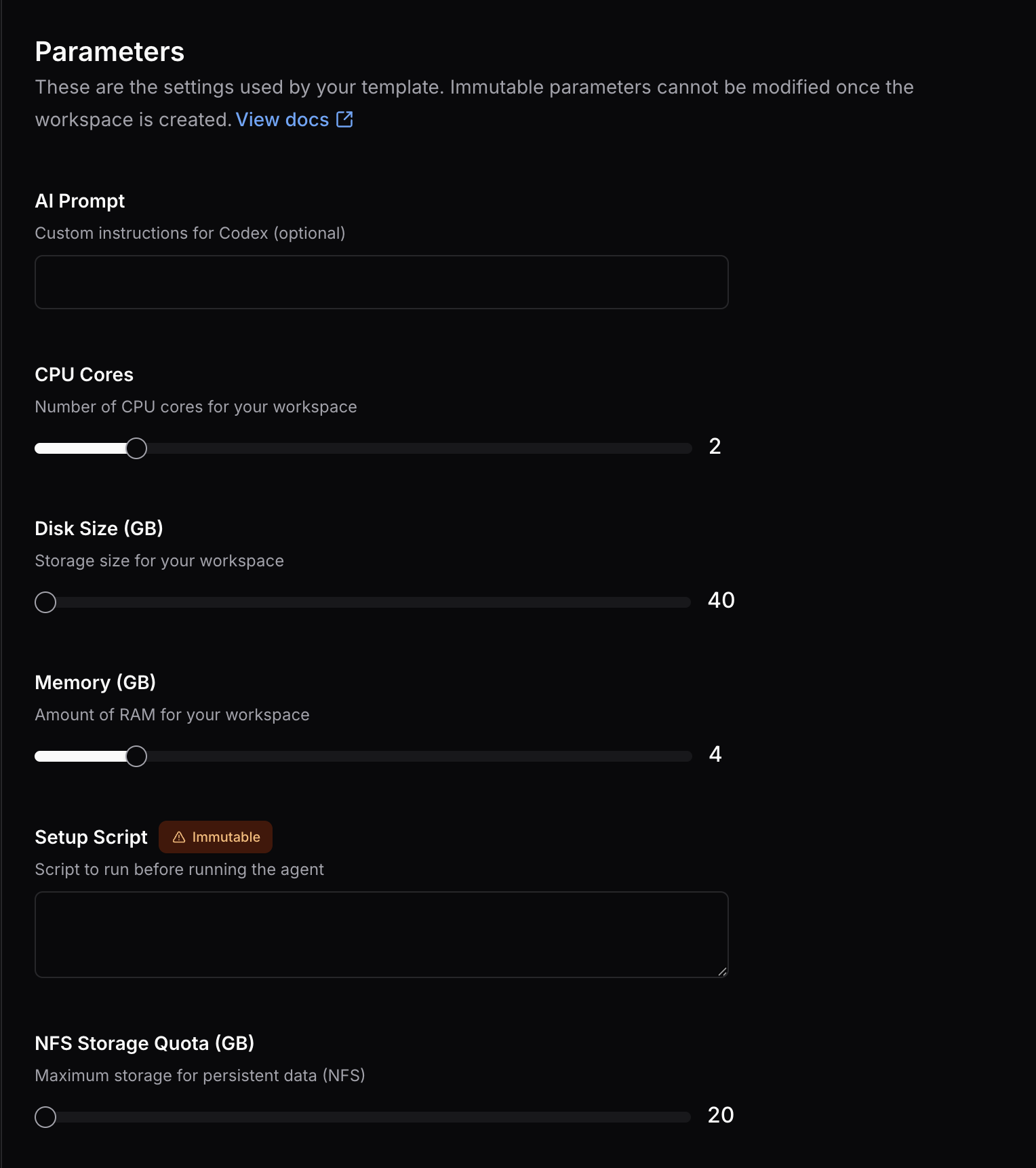

Parameter Selection: True Self-Service

Template parameters are presented as intuitive sliders and dropdowns, making resource selection feel like using a commercial cloud service:

Dynamic sliders for CPU cores, memory, disk size, and NFS storage quota - adjust resources instantly without infrastructure tickets

Dynamic sliders for CPU cores, memory, disk size, and NFS storage quota - adjust resources instantly without infrastructure tickets

Parameter UI Features:

- CPU Cores Slider: Visual selection from 2-16 cores with real-time value display

- Memory Slider: Choose RAM from 4GB-64GB based on workload needs

- Disk Size Slider: Allocate workspace disk from 20GB-200GB

- NFS Storage Quota Slider: Set persistent home directory quota from 20GB-500GB

- AI Prompt Field: Optional custom instructions for AI tools (Codex, Aider, etc.)

- Setup Script: Optional initialization script for workspace customization

- Immutable Parameters: Some settings locked after creation (marked with badge)

- Real-time Validation: Prevents invalid configurations before submission

The slider-based interface transforms infrastructure provisioning from a complex request process into an instant, self-service experience - no need to file tickets, wait for approvals, or understand infrastructure details.

MCP Server Architecture: Giving AI Specialized Tools

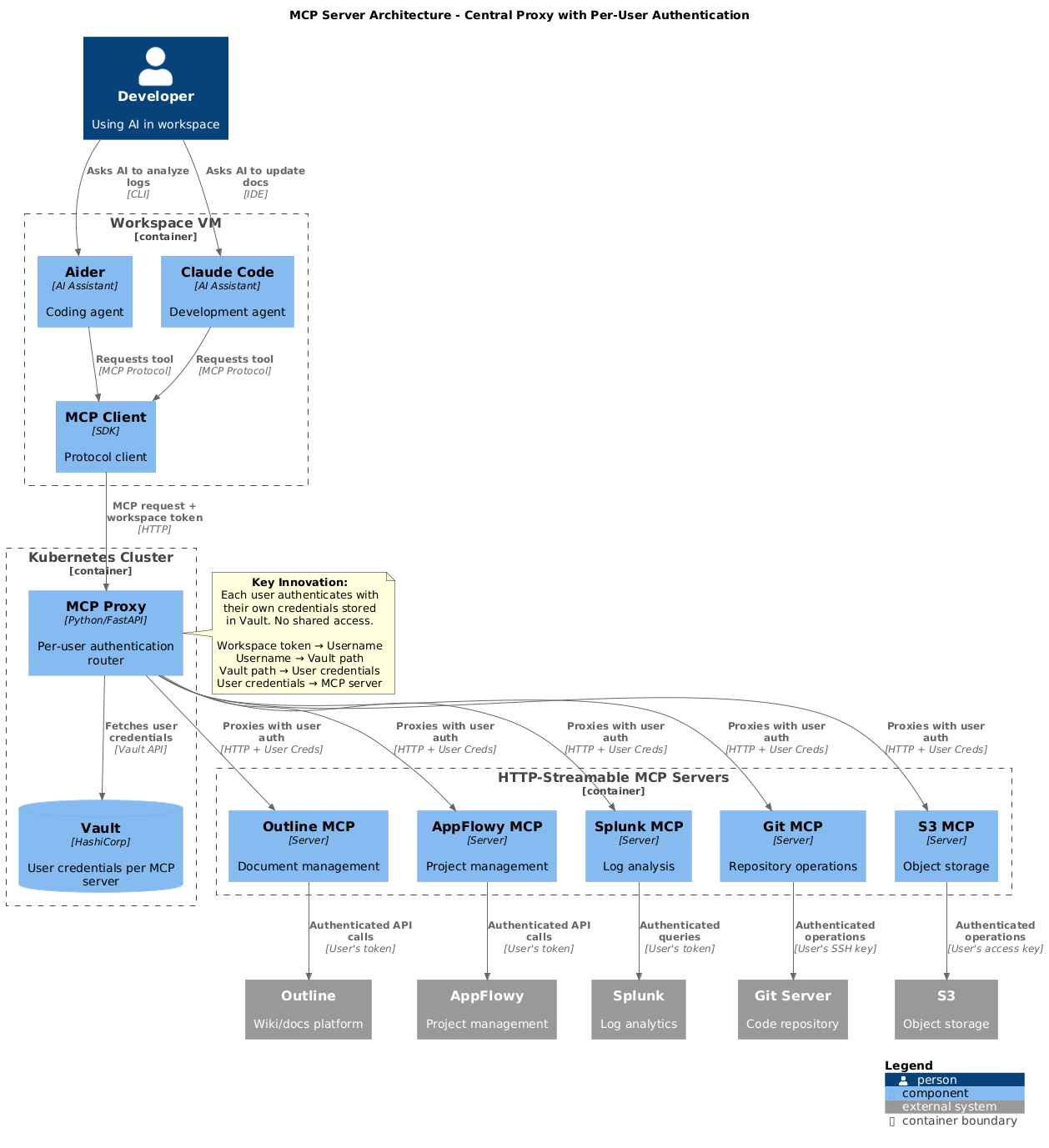

Central MCP Proxy architecture with per-user authentication and HTTP-streamable MCP servers

Central MCP Proxy architecture with per-user authentication and HTTP-streamable MCP servers

A revolutionary aspect of the platform is the integration of MCP (Model Context Protocol) servers - a standardized way to give AI models access to external tools, data sources, and services. This transforms AI from a simple chat interface into an intelligent agent that can interact with your entire development infrastructure.

What is MCP?

Model Context Protocol (MCP) is an open standard that allows AI models to:

- Invoke tools and functions (e.g., query databases, call APIs)

- Access data sources (e.g., documentation, code repositories, issue trackers)

- Interact with services (e.g., create documents, manage tasks, deploy code)

- Maintain context across multiple interactions

Think of MCP as giving AI eyes and hands - instead of just generating text, AI can read your documentation, query your databases, interact with your tools, and take actions on your behalf.

MCP Servers in Coder Workspaces

Each workspace can have multiple MCP servers running, each providing different capabilities:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

+=============================================================+

| AI Model (Claude, GPT-4, etc.) |

| Running in workspace via Aider/Codex |

+=============================================================+

| MCP Protocol

↓

+=============================================================+

| Central MCP Proxy (Per-User Authentication) |

| - Routes requests to appropriate MCP servers |

| - Validates user OAuth token |

| - Injects user credentials per MCP server |

+=============================================================+

|

+============+============+============+============+============+

↓ ↓ ↓ ↓ ↓

+============+ +============+ +============+ +============+ +============+

| Outline | | AppFlowy | | Splunk | | Git | | S3 |

| MCP | | MCP | | MCP | | MCP | | MCP |

+============+ +============+ +============+ +============+ +============+

↓ ↓ ↓ ↓ ↓

[User Auth] [User Auth] [User Auth] [User Auth] [User Auth]

Why HTTP-Streamable MCP Servers?

The platform uses HTTP-streamable MCP servers (not stdio/local MCP servers) for a critical reason: per-user authentication.

The Problem with Traditional MCP:

1

2

3

4

5

❌ Traditional Approach (stdio/local):

- MCP server runs locally with pre-configured credentials

- All users share the same MCP server instance

- Everyone has access to the same data/tools

- Security nightmare: one compromised workspace = everyone's data exposed

The Solution: HTTP-Streamable + Central Proxy:

1

2

3

4

5

6

✅ HTTP-Streamable Approach:

- Each MCP server is a network service (HTTP/SSE)

- Central MCP proxy authenticates each request

- User's OAuth token passed to MCP servers

- Each user accesses only THEIR data via THEIR credentials

- Zero shared access, complete isolation

Central MCP Proxy Architecture

The Central MCP Proxy is the key innovation that makes multi-user MCP deployment secure:

Architecture:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

Workspace (User: john.doe)

↓

AI Model makes request: "Search my Outline documents for Coder notes"

↓

Aider sends MCP request with workspace token

↓

+==============================================================+

| Central MCP Proxy |

| |

| 1. Validate workspace token |

| → Extract username: john.doe |

| |

| 2. Route to Outline MCP server |

| → HTTP POST to outline-mcp.internal |

| |

| 3. Inject user credentials |

| → Add header: X-User-Token: john.doe-outline-token |

| → Outline MCP uses john.doe's API key from Vault |

| |

| 4. Return results to workspace |

| → Stream response back to AI model |

+==============================================================+

↓

Outline MCP Server

→ Authenticates to Outline API using john.doe's token

→ Returns only documents john.doe has access to

→ AI sees only john.doe's Outline workspace

Security Benefits:

| Aspect | Traditional MCP | HTTP-Streamable + Proxy |

|---|---|---|

| Authentication | Shared credentials | Per-user OAuth tokens |

| Access Control | Everyone sees everything | User sees only their data |

| Audit Trail | No user attribution | Complete per-user logging |

| Revocation | Restart MCP server | Disable user’s OAuth token |

| Isolation | None | Complete workspace isolation |

MCP Server Examples

1. Outline MCP Server

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

// outline-mcp-server (HTTP-streamable)

// Provides AI access to Outline documentation

Tools provided to AI:

- search_documents(query: string): Search user's Outline docs

- get_document(id: string): Retrieve specific document

- create_document(title: string, content: string): Create new doc

- list_collections(): List user's collections

Example AI interaction:

User: "Search my Outline docs for Coder architecture notes"

AI: [Calls search_documents("Coder architecture")]

MCP Proxy: [Authenticates as john.doe, queries Outline API]

Result: Returns john.doe's Outline documents about Coder

AI: "I found 3 documents about Coder architecture..."

Per-User Authentication:

1

2

3

4

5

6

7

8

9

10

11

12

13

# MCP request includes workspace token

POST /mcp/outline/search

Headers:

X-Workspace-Token: john.doe:workspace-abc123

# MCP Proxy validates token and looks up Outline API key

john.doe → Vault → outline_api_key_john_doe

# Outline MCP uses john.doe's API key

GET https://outline.example.com/api/documents

Authorization: Bearer john_doe_api_key

# Returns only john.doe's accessible documents

2. AppFlowy MCP Server

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

// appflowy-mcp-server (HTTP-streamable)

// Provides AI access to AppFlowy workspaces (Notion-like)

Tools provided to AI:

- get_workspace(): Get user's AppFlowy workspace

- search_pages(query: string): Search pages and databases

- create_page(title: string): Create new page

- update_database(id: string, data: object): Update database rows

- get_kanban_board(id: string): Get project board

Example AI interaction:

User: "Show me tasks from my sprint board in AppFlowy"

AI: [Calls get_kanban_board("sprint-board")]

MCP Proxy: [Authenticates as john.doe, queries AppFlowy]

Result: Returns john.doe's AppFlowy sprint board data

AI: "You have 5 tasks in progress: 1. Implement auth..."

3. Splunk MCP Server

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

// splunk-mcp-server (HTTP-streamable)

// Provides AI access to Splunk data and searches

Tools provided to AI:

- search(query: string, timerange: string): Run SPL search

- get_saved_searches(): List user's saved searches

- get_dashboards(): List accessible dashboards

- create_alert(query: string, conditions: object): Create alert

Example AI interaction:

User: "Show me error rate for my app in the last hour"

AI: [Calls search("index=main app=myapp error | stats count", "1h")]

MCP Proxy: [Authenticates as john.doe with Splunk token]

Result: Returns Splunk search results john.doe can access

AI: "Your app had 47 errors in the last hour, mostly 500s..."

4. Git MCP Server (Forgejo)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

// git-mcp-server (HTTP-streamable)

// Provides AI access to Git repositories

Tools provided to AI:

- list_repos(): List user's repositories

- search_code(query: string): Search code across repos

- get_file(repo: string, path: string): Get file contents

- create_pr(repo: string, title: string, branch: string): Create PR

- list_issues(repo: string): List issues

Example AI interaction:

User: "Find all TODO comments in my coder-templates repo"

AI: [Calls search_code("TODO", repo="coder-templates")]

MCP Proxy: [Authenticates as john.doe to Forgejo]

Result: Returns TODO comments from john.doe's repo

AI: "Found 12 TODO comments across 5 files..."

5. S3 MCP Server

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

// s3-mcp-server (HTTP-streamable)

// Provides AI access to S3 object storage

Tools provided to AI:

- list_buckets(): List user's buckets

- list_objects(bucket: string): List objects in bucket

- upload_file(bucket: string, path: string, content: string): Upload

- download_file(bucket: string, path: string): Download

- create_presigned_url(bucket: string, path: string): Get shareable URL

Example AI interaction:

User: "Upload this diagram to my workspace S3 bucket"

AI: [Calls upload_file("john-doe-workspace", "diagrams/arch.png", data)]

MCP Proxy: [Authenticates as john.doe, gets S3 credentials]

Result: File uploaded to john.doe's personal bucket

AI: "Diagram uploaded successfully to your workspace bucket"

Automated MCP Server Deployment

MCP servers are deployed and managed automatically via scripts:

Deployment Script:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

#!/bin/bash

# deploy-mcp-servers.sh

# Automatically deploy MCP servers to Kubernetes

MCP_SERVERS=(

"outline-mcp"

"appflowy-mcp"

"splunk-mcp"

"git-mcp"

"s3-mcp"

)

for server in "${MCP_SERVERS[@]}"; do

echo "Deploying $server..."

# Build container image

docker build -t mcp-registry.local/$server:latest ./mcp-servers/$server

# Push to internal registry

docker push mcp-registry.local/$server:latest

# Deploy to Kubernetes

kubectl apply -f ./k8s-manifests/$server-deployment.yaml

# Update MCP Proxy routing configuration

kubectl exec -n coder mcp-proxy-0 -- \

mcp-proxy-ctl add-route $server http://$server.mcp-namespace.svc.cluster.local:8080

done

echo "All MCP servers deployed!"

Kubernetes Deployment Example:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

# outline-mcp-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: outline-mcp

namespace: mcp-servers

spec:

replicas: 2 # HA for reliability

selector:

matchLabels:

app: outline-mcp

template:

metadata:

labels:

app: outline-mcp

spec:

containers:

- name: outline-mcp

image: mcp-registry.local/outline-mcp:latest

ports:

- containerPort: 8080

name: http

env:

- name: VAULT_ADDR

value: "http://vault.vault.svc.cluster.local:8200"

- name: MCP_MODE

value: "http-streamable" # Not stdio!

---

apiVersion: v1

kind: Service

metadata:

name: outline-mcp

namespace: mcp-servers

spec:

selector:

app: outline-mcp

ports:

- port: 8080

targetPort: 8080

name: http

type: ClusterIP

Central MCP Proxy Configuration

MCP Proxy Routing Table:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

# mcp-proxy-config.yaml

routes:

- name: outline

upstream: http://outline-mcp.mcp-servers.svc.cluster.local:8080

auth:

type: oauth-vault

vault_path: secret/mcp/outline/{username}

- name: appflowy

upstream: http://appflowy-mcp.mcp-servers.svc.cluster.local:8080

auth:

type: oauth-vault

vault_path: secret/mcp/appflowy/{username}

- name: splunk

upstream: http://splunk-mcp.mcp-servers.svc.cluster.local:8080

auth:

type: oauth-vault

vault_path: secret/mcp/splunk/{username}

- name: git

upstream: http://git-mcp.mcp-servers.svc.cluster.local:8080

auth:

type: oauth-vault

vault_path: secret/mcp/git/{username}

# Proxy validates workspace token via Coder API

coder_api_url: https://coder.example.com/api/v2

MCP Proxy Request Flow:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

# Simplified MCP Proxy logic

async def handle_mcp_request(request):

# 1. Extract workspace token from request

workspace_token = request.headers.get("X-Workspace-Token")

# 2. Validate token with Coder API

username = await coder_api.validate_token(workspace_token)

if not username:

return {"error": "Invalid workspace token"}

# 3. Extract MCP server name from request path

mcp_server = request.path.split("/")[2] # /mcp/outline/search

# 4. Get user's credentials for this MCP server from Vault

vault_path = f"secret/mcp/{mcp_server}/{username}"

user_creds = await vault.read(vault_path)

# 5. Forward request to MCP server with user credentials

upstream_url = mcp_routes[mcp_server]["upstream"]

response = await http.post(

url=upstream_url + request.path,

headers={

"X-User-Credentials": user_creds,

"X-Username": username,

},

json=request.json

)

# 6. Stream response back to workspace

return response

MCP Server Configuration in Workspace

Aider Configuration:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

# ~/.aider/mcp.yaml in workspace

# AI tools automatically discover and use these MCP servers

mcp_servers:

outline:

url: https://mcp-proxy.example.com/mcp/outline

auth: workspace_token # Automatically injected by Coder agent

appflowy:

url: https://mcp-proxy.example.com/mcp/appflowy

auth: workspace_token

splunk:

url: https://mcp-proxy.example.com/mcp/splunk

auth: workspace_token

git:

url: https://mcp-proxy.example.com/mcp/git

auth: workspace_token

s3:

url: https://mcp-proxy.example.com/mcp/s3

auth: workspace_token

Automatically configured by Terraform template:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

resource "coder_agent" "main" {

# ... other config ...

startup_script = <<-EOT

# Configure MCP servers for AI tools

mkdir -p ~/.aider

cat > ~/.aider/mcp.yaml <<EOF

mcp_servers:

outline:

url: ${var.mcp_proxy_url}/mcp/outline

auth: $CODER_AGENT_TOKEN

appflowy:

url: ${var.mcp_proxy_url}/mcp/appflowy

auth: $CODER_AGENT_TOKEN

splunk:

url: ${var.mcp_proxy_url}/mcp/splunk

auth: $CODER_AGENT_TOKEN

git:

url: ${var.mcp_proxy_url}/mcp/git

auth: $CODER_AGENT_TOKEN

s3:

url: ${var.mcp_proxy_url}/mcp/s3

auth: $CODER_AGENT_TOKEN

EOF

# AI tools now have access to all MCP servers with user's credentials

EOT

}

Benefits of This Architecture

Security:

- ✅ Per-user authentication for all MCP servers

- ✅ No shared credentials across users

- ✅ Complete workspace isolation

- ✅ Credentials stored securely in Vault

- ✅ OAuth token revocation disables all MCP access

Developer Experience:

- ✅ AI can access user’s actual data (Outline docs, Splunk searches, etc.)

- ✅ No manual configuration - MCP servers auto-configured in workspace

- ✅ Seamless integration - AI tools discover servers automatically

- ✅ Rich tooling - AI has access to documentation, databases, APIs

Operational Excellence:

- ✅ Centralized deployment - all MCP servers in Kubernetes

- ✅ Automated updates - push new MCP servers via script

- ✅ High availability - MCP servers run with multiple replicas

- ✅ Audit trail - all MCP requests logged with username

Scalability:

- ✅ Add new MCP servers easily - just deploy and update proxy config

- ✅ Horizontal scaling - add more MCP proxy instances as needed

- ✅ Per-user rate limiting - prevent abuse

- ✅ Caching - frequently accessed data cached per user

Real-World Use Case: AI-Powered Development Workflow

Scenario: Developer working on a feature, using AI with full MCP integration

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

+==================+ +==========================+

| Developer | | AI Assistant |

| (Workspace) | | (with MCP Access) |

+==================+ +==========================+

| |

| "Help me implement |

| user authentication" |

|===============================================>|

| |

| | [1] Search Outline MCP

| | 📚 Docs

| | ↓

| | "Found: Authentik OIDC guide"

| |

| | [2] Search Git MCP

| | 🔍 Code

| | ↓

| | "Found: auth.go in user-service"

| |

| | [3] Query Splunk MCP

| | 📊 Logs

| | ↓

| | "23 auth failures: invalid_grant"

| |

| | [4] Check AppFlowy MCP

| | 📋 Tasks

| | ↓

| | "Task assigned to you, due Friday"

| |

| Response with full context: |

| - Documentation reference |

| - Code examples |

| - Error analysis |

| - Task deadline |

|<===============================================|

| |

| "Create PR for my |

| auth implementation" |

|===============================================>|

| |

| | [5] Git MCP: Create PR

| | ✅ PR #47 opened

| |

| | [6] AppFlowy MCP: Update task

| | ✅ Moved to "In Review"

| |

| | [7] S3 MCP: Upload diagram

| | ✅ auth-flow-diagram.png

| |

| ✅ PR created, task updated, diagram uploaded |

|<===============================================|

| |

+=====================================================================================+

| MCP Server Access |

| |

| 📚 Outline MCP 🔍 Git MCP 📊 Splunk MCP 📋 AppFlowy MCP 💾 S3 MCP |

| (Docs) (Code) (Logs) (Tasks) (Files) |

| |

| All authenticated as the developer |

| All scoped to developer's permissions |

+=====================================================================================+

This is AI-augmented development done right - the AI has access to your documentation, code, logs, tasks, and tools. Authentication is handled through proper RBAC (Role-Based Access Control):

- AI Service Identity: For Git operations, CI/CD, and external integrations, the AI uses its own service account identity with scoped permissions (configured through Authentik/Vault)

- User Context: For workspace operations like editing files or running commands, the AI operates in your workspace context but actions are auditable

- RBAC Enforcement: Authentik provides fine-grained permission control - you can restrict what AI service accounts can access

- Production Security: In production deployments, AI service accounts have limited, least-privilege access - no full user permissions

- Audit Trail: All AI actions are logged with service account attribution, not masked as user actions

Example: When AI commits to Git, it uses a dedicated “ai-assistant” service account, not your personal credentials. When AI updates Plane tasks, it can use your identity (with your approval) or a service account depending on RBAC policy.

Future MCP Server Integrations

Planned MCP Servers:

- Plane MCP: Open source project management - create/update issues, epics, and sprints

- Mattermost MCP: Team messaging and collaboration (planned)

- Database MCP: Query PostgreSQL/MySQL with user’s DB credentials

- Kubernetes MCP: View pods, logs, deployments for user’s namespaces

- Terraform MCP: Read state, plan infrastructure changes

- Monitoring MCP: Query Prometheus/Grafana with user-scoped metrics

The MCP server architecture transforms AI from a code generator into an intelligent development assistant that can interact with your entire development infrastructure, all while maintaining strict per-user authentication and security.

Data Persistence: The NFS Architecture That Changes Everything

The Problem with Ephemeral VMs

By default, Coder treats workspace VMs as ephemeral - when you stop, restart, or rebuild a workspace, the VM is destroyed and recreated from scratch. This is fantastic for ensuring clean, reproducible environments, but it creates a critical challenge: where does your data go?

Traditional solutions involve:

- Local VM storage: Lost on every rebuild

- Git repositories: Only code, not your entire development environment

- Manual backups: Time-consuming and error-prone

For a production-grade developer platform, we needed something better: true persistence that survives VM destruction while maintaining the clean-slate benefits of ephemeral infrastructure.

The Solution: External NFS + ZFS Dataset Lifecycle Management

The breakthrough came from separating compute (ephemeral VMs) from storage (persistent NFS mounts). Here’s how it works:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

┌─────────────────────────────────────────────────────────────────┐

│ Workspace Lifecycle │

├─────────────────────────────────────────────────────────────────┤

│ │

│ Workspace Created: │

│ ┌─────────────────────────────────────────────────────────┐ │

│ │ 1. Terraform: Run truenas-dataset-manager.sh create │ │

│ │ → Creates ZFS dataset: tank/coder/users/john/ws1 │ │

│ │ → Sets quota: 100GB (user-configurable) │ │

│ │ → Creates NFS share for dataset │ │

│ │ → Sets ownership: uid 1000, gid 1000 │ │

│ │ │ │

│ │ 2. Proxmox: Provision VM from template │ │

│ │ → CPU: 4 cores (slider: 1-8) │ │

│ │ → RAM: 8GB (slider: 2-32GB) │ │

│ │ → Disk: 40GB local (slider: 20-100GB) │ │

│ │ │ │

│ │ 3. Cloud-init: Mount NFS on first boot │ │

│ │ → mount 192.168.x.x:/mnt/tank/coder/users/john/ws1 │ │

│ │ → /home/${username} │ │

│ │ → All user data now on persistent NFS │ │

│ └─────────────────────────────────────────────────────────┘ │

│ │

│ Workspace Stopped/Started/Rebuilt: │

│ ┌─────────────────────────────────────────────────────────┐ │

│ │ VM Destroyed → Recreated from template │ │

│ │ NFS Dataset: UNTOUCHED - still exists on TrueNAS │ │

│ │ On boot: Re-mounts same NFS share │ │

│ │ Result: All files, configs, history preserved │ │

│ └─────────────────────────────────────────────────────────┘ │

│ │